The Oversight Board of Facebook – what do we need to know about Facebook’s new regulatory body?

The Oversight Board is an independent body established by Facebook that reviews the company’s decisions to remove content (posts, comments, pages, groups, accounts).

Following the 2016 U.S. presidential election, Facebook’s management initiated discussions with different groups of academics and advocates about how to improve the safety of the platform.[1] Later, Mark Zuckerberg announced that Facebook would create an oversight body to help the company govern content. According to him, Facebook as a company should not make decisions about freedom of expression and online safety on its own. In January 2019, Vice President Nick Clegg published additional details about the activities of the oversight body, the scope of its power and the creation of its Charter.

The company’s initiative was met with mixed public reactions.[2] Some were concerned that the Oversight Board would exercise censorship over user speech and block governments from regulating Facebook. Others saw the Oversight Board as a PR move that might never become reality, while some worried that Facebook would use the board as a scapegoat, allowing the platform to divert responsibility in the face of public criticism.[3]

Eventually, the Oversight Board was formed in 2019 and is composed of 40 members. Facebook selected journalists, lawyers, professors and former ministers from all continents as Board members. They review cases on a rotating basis, in panels of five members. The Board began reviewing cases in October 2020 and published its first decisions several weeks ago.

Why is the oversight body necessary?

Digital progress has dramatically changed the ways people receive and publish information. Facebook, which started as a platform for sharing information among friends, has become an important digital political, social and economic instrument. Apart from sharing information, different groups increasingly use the platform to influence public opinion, including through disinformation, hate speech and other malicious methods.

To address the problem, every year Facebook introduces new methods for improving content governance, sets overarching policy based on fundamental human rights and national laws, reviews content that runs against that policy with the help of 15,000 moderators, and cooperates with local fact-checking organizations in order to make the right decisions based on the local political and cultural context. According to Facebook, the establishment of an independent and neutral Oversight Board will be a step forward in protecting the freedom of expression of Facebook users.

“As its community grew to more than 2 billion people, it became increasingly clear to the Facebook company that it shouldn’t be making so many decisions about speech and online safety on its own,” says the Oversight Board website.

Photo: http://oversightboard.com

What does the Oversight Board review?

The Oversight Board announced in October 2020 that it would be accepting cases for review. Since then, it has received 180,000 applications. Both ordinary Facebook users and coordinated inauthentic networks removed by Facebook can appeal to the Board against Facebook’s decision to remove content. The content is restored if the Board finds that their behavior and/or posts do not conflict with Facebook’s regulations. Notably, over the last year Facebook has removed more than a thousand pages, accounts and groups in Georgia.

Among the decisions published by the Board[4], six have reversed Facebook’s decision to take down content, requiring the post to be restored. High-profile cases still to be decided by the Board include the removal of the Facebook and Instagram accounts of former U.S. President Donald Trump. That case is currently pending and is open for public comment, which allows users to express their opinions about cases under review by the Board.

Below are two examples of decisions made by the Board – one requires Facebook to restore the post and another upholds Facebook’s decision to take down the content.

- Decision of the Board ordering Facebook to restore the post: Case Decision 2020-005-FB-UA

In October 2020, a user posted a quote that Facebook attributed to Joseph Goebbels, the Reich Minister of Propaganda in Nazi Germany. Facebook removed the post for violating its Community Standard on Dangerous Individuals and Organizations. According to the quote, arguments should appeal to emotions and instincts rather than to intellectuals. The quote also stated that truth does not matter and is subordinate to tactics and psychology. There were no pictures of Joseph Goebbels or Nazi symbols in the post. In their statement to the Board, the user said that their intent was to draw a comparison between the sentiment in the quote and the presidency of Donald Trump.

In its response to the Board, Facebook confirmed that Joseph Goebbels is on the company’s list of dangerous individuals. It claimed that the quote expressed Goebbels’ ideas and that it saw no additional context from the user that would make their intent explicit.

The Board found that the quote did not support the Nazi party’s ideology or the regime’s acts of hate and violence, and that the post sought to compare the presidency of Donald Trump to the Nazi regime.

According to the Board, under international human rights standards, any rules that restrict freedom of expression must be clear, precise and publicly accessible, so that individuals can conduct themselves accordingly. Facebook’s regulations do not explain the meaning of “support,” and Facebook does not provide a public list of dangerous individuals and organizations. The Board found that in this case, the user could not have known that they were violating Facebook’s Community Standard.

The Oversight Board ordered Facebook to restore the post. It also provided relevant recommendations to improve Facebook regulations and policy.

- Decision of the Board upholding Facebook’s decision to take down the post: Case Decision 2020-003-FB-UA

In November 2020, a user posted content that included historical photos described as showing churches in Baku, Azerbaijan. The accompanying text in Russian claimed that Armenians built Baku and that this heritage, including the churches, has been destroyed. The user used the derogatory term “тазики” to describe Azerbaijanis.

The user included hashtags in the post calling for an end to Azerbaijani aggression and vandalism. Another hashtag called for the recognition of Artsakh (the Armenian name for the Nagorno-Karabakh region) which is at the center of the conflict between Armenia and Azerbaijan. The post received more than 45,000 views and was posted during the recent armed conflict between the two countries.

Facebook removed the post for violating its Community Standard on Hate Speech, claiming that the post used a slur to describe a group of people based on a protected characteristic (national origin). The term “тазики,” which the post used to describe Azerbaijanis, can be translated literally from Russian as “wash bowl.” The term was featured on Facebook’s list of slur terms.

Independent linguistic analysis commissioned on behalf of the Board confirmed Facebook’s understanding of “тазики” as a dehumanizing slur attacking national origin. The Board therefore upheld Facebook’s decision to remove the post. The Board found that Facebook’s values, which include “Safety” and “Dignity,” rule out allowing such content on the platform. Some members of the Board found that Facebook’s deletion of the post was not proportionate as a punitive measure.

How does the Oversight Board work?

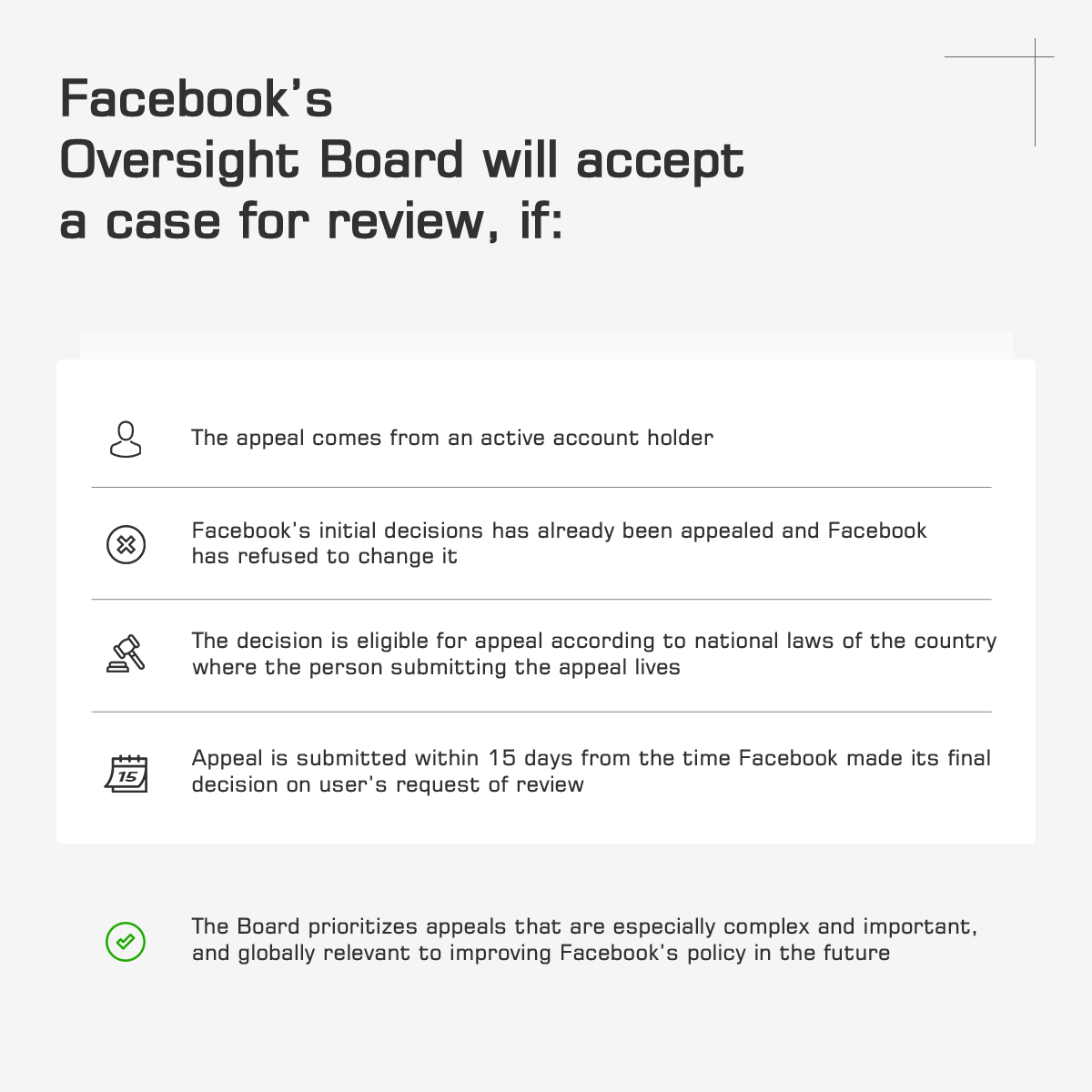

If Facebook or Instagram has decided to remove a particular piece of content (post, comment, page, group, report) and has reviewed the user’s appeal but left the removal decision in effect, that decision can be challenged before the Oversight Board. To do so, the person should submit an online appeal to the Board. For the appeal to be admitted by the Board, the following conditions must be met:

- The appeal must come from an active account holder;

- Facebook must have already reviewed its initial decision and refused to change it;

- Content decisions must be eligible for appeal under the national laws of the country where the person submitting the appeal lives;

- Appeals must be submitted within 15 days of Facebook’s final decision on the user’s request for review.

The Board prioritizes appeals that are especially complex and important, or relevant for improving Facebook’s policy. If the Board decides to review an appeal, it will make its final decision within 90 days.
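The admissibility conditions and time limits above can be read as a simple checklist. The following is a minimal Python sketch of that checklist, not the Board’s actual intake logic: the `Appeal` fields and the function names `is_admissible` and `decision_deadline` are hypothetical, and only the 15-day and 90-day limits come from the text. It also assumes that the 90 days are counted from the day the Board takes up the case, which the text does not specify.

```python
from dataclasses import dataclass
from datetime import date, timedelta

APPEAL_WINDOW_DAYS = 15    # from the text: appeal within 15 days of Facebook's final decision
DECISION_WINDOW_DAYS = 90  # from the text: the Board decides within 90 days


@dataclass
class Appeal:
    """Hypothetical record of one appeal; field names are illustrative only."""
    account_active: bool               # the appeal comes from an active account holder
    facebook_review_completed: bool    # Facebook reviewed and kept its initial decision
    eligible_under_national_law: bool  # decision is appealable under national law
    facebook_final_decision: date      # date of Facebook's final decision
    submitted_on: date                 # date the appeal reached the Board


def is_admissible(appeal: Appeal) -> bool:
    """Return True only if all four conditions listed in the article hold."""
    days_elapsed = (appeal.submitted_on - appeal.facebook_final_decision).days
    return (
        appeal.account_active
        and appeal.facebook_review_completed
        and appeal.eligible_under_national_law
        and 0 <= days_elapsed <= APPEAL_WINDOW_DAYS
    )


def decision_deadline(review_started: date) -> date:
    """Latest decision date, ASSUMING the 90 days run from the start of review."""
    return review_started + timedelta(days=DECISION_WINDOW_DAYS)


# Example: an appeal filed 11 days after Facebook's final decision.
example = Appeal(True, True, True, date(2021, 1, 4), date(2021, 1, 15))
print(is_admissible(example))
```

Even an admissible appeal is not guaranteed a hearing, since the Board selects only the cases it considers most complex, important or relevant to policy.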

According to the Board, decisions will be made based on Facebook values, which include: authenticity, safety, protection of personal data, protection of human dignity and freedom of expression.

Before starting to review a case, the Board will publish details of the case on its website. The case will also be open for public comments. The comments will then be used by the Board in the process of deliberation.

The Board’s final decision will be made publicly available and the corresponding recommendations will be sent to Facebook. The Board’s resolution of each case will be binding: Facebook will be required to implement it and to restore the content if the Board demands it.

What legislation does the Oversight Board use?

Although the Oversight Board does not have a court mandate, its decisions in fact affect freedom of expression.

According to Article 2(2) of the Board’s Charter[5], which concerns decision-making, the Board will review content enforcement decisions and determine whether they were consistent with Facebook’s content policies and values.

Additionally, for each decision, any prior board decisions will have precedential value and should be viewed as highly persuasive when the facts, applicable policies, or other factors are substantially similar.

According to the same article, when reviewing decisions, the board will pay particular attention to the impact of removing content in light of human rights norms protecting free expression.

This norm does not establish any hierarchy among the sources of law applied in the decision-making process. However, the spirit of the Charter and the reasoning in the Board’s decisions suggest that the Board first assesses whether Facebook’s content decisions comply with the company’s own standards, and then whether they comply with international human rights law.

According to Evelyn Douek[6], Lecturer on Law and S.J.D. candidate at Harvard Law School, one of the key questions for Facebook Oversight Board watchers has been what source of law the Board will apply. The Board is not an actual court and it has no legal mandate. Its Charter states that it will review content enforcement decisions and determine whether they were consistent with Facebook’s content policies and values. The Board pays particular attention to the impact of removing content in light of human rights norms protecting free expression. According to Douek, this is a somewhat awkward mix of authorities. It is not clear how the Board should reconcile applying Facebook’s private set of rules and values with paying attention to international human rights law.

Suspicion and skepticism are often expressed about the Board’s independence. Notably, Facebook has allocated $130 million to fund the Oversight Board, which, according to experts, calls the Board’s independence into question. In addition, critics believe that the Board’s powers are very limited and that it will not be given an opportunity to address Facebook’s root problems, such as the manipulation of voters through information and the interference of foreign actors in domestic politics.

Facebook has made an unprecedented move by creating an oversight body, and this may prompt other companies to create regulatory institutions that oversee content governance by social media companies based on the principle of neutrality. However, the scope of the Board’s impact and powers can only be evaluated after its future decisions, especially in cases that involve moderation of not only the platform’s content but also its algorithm.

----

[1] Kate Klonick, The Facebook Oversight Board: Creating an Independent Institution to Adjudicate Online Free Expression; https://www.yalelawjournal.org/feature/the-facebook-oversight-board

[2] Ibid.

[3] Josh Constine, Toothless: Facebook Proposes a Weak Oversight Board, TechCrunch; https://techcrunch.com/2020/01/28/under-consideration/

[4] The Oversight Board has reviewed 7 cases so far: https://oversightboard.com/decision/

[5] Charter of the Oversight Board, Article 2.2; https://oversightboard.com/governance/